The Kinect interface by Microsoft allows control by gestures (i.e. body movement) and by speech. This new type of control is implemented using a combination of a PrimeSense depth sensor, a 3D microphone, a color camera and software. The interface can distinguish between up to six different persons.

The following items are covered in this topic:

Integrating Kinect into the Avio System

Available channels and their uses

Kinect is integrated via Avio Service. As a preparatory step, install Kinect for Windows Runtime v1.8, which is available on the Microsoft website.

After installing Kinect Runtime, restart Avio Service: right-click the Avio Service tray icon and select Restart Avio Service.

and select Restart Avio Service.

Click the Avio Service tray icon and select Functional Groups. In the dialog that appears, enable this functional group by clicking Microsoft Kinect; it is then listed in yellow at the top. Finish by clicking Apply Changes.

and select Functional Groups. In the dialog popping up enable this functional group by clicking Microsoft Kinect following which it is then listed in yellow at the top. Finish by clicking Apply Changes.

If an error message reports that a PlugIn is missing, Kinect Runtime is either not installed correctly or has not yet been recognized by Avio Service. Make sure that steps 1 and 2 (installing Kinect Runtime and restarting Avio Service) have been completed before you go on. Check whether the file Kinect.plugin.xml is located in "C:\Program Files (x86)\AV Stumpfl\Wings Avio Service\plugins\Kinect"; this file is absolutely necessary. Then restart Avio Service.
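
To check for the plugin file without browsing to it, the following minimal Python sketch tests whether Kinect.plugin.xml exists in the folder named above. It assumes Avio Service was installed to the default location quoted in this topic; the function name is hypothetical and the script is not part of Avio.

```python
from pathlib import Path

# Default install location quoted in this topic (assumption: adjust it if
# Avio Service was installed elsewhere).
DEFAULT_SERVICE_DIR = Path(r"C:\Program Files (x86)\AV Stumpfl\Wings Avio Service")


def kinect_plugin_present(service_dir: Path = DEFAULT_SERVICE_DIR) -> bool:
    """Return True if plugins/Kinect/Kinect.plugin.xml exists below service_dir."""
    return (service_dir / "plugins" / "Kinect" / "Kinect.plugin.xml").is_file()


if __name__ == "__main__":
    if kinect_plugin_present():
        print("Kinect.plugin.xml found: restart Avio Service.")
    else:
        print("Kinect.plugin.xml missing: reinstall Kinect for Windows Runtime v1.8.")
```

If the file is present but the error persists, restarting Avio Service is still required, as described above.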

Should an error message point out that there is a PlugIn missing, Kinect Runtime is not installed correctly or has not yet been recognised by Avio Service. Please make sure that steps 1 and 2 are completed before you go on. Check whether file Kinect.plugin.xml is located in "C:\Program Files (x86)\AV Stumpfl\Wings Avio Service\plugins\Kinect“ - this file is absolutely necessary. Restart Avio Service.

Before you start using Kinect in the Avio System, you should configure the device and define some options.

Click the Avio Service tray icon and select Configure Kinect...; the configuration dialog appears. If this entry cannot be selected, carry out the steps described under Integrating Kinect into the Avio System.

tray icon and select Configure Kinect... following which the configuration dialog will appear. If this entry can’t be selected you need to take the steps defined under Integrating Kinect into the Avio System.

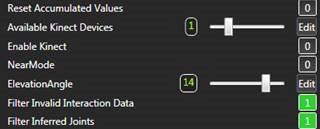

The Startup settings are basic settings that are transferred to the Avio System when Avio Service starts. The following values can be changed via Avio Manager:

Elevation Angle ...defines the degree of inclination of the Kinect.

Enable Kinect on Service Start ...defines whether Kinect is started together with Avio Service and delivers values.

Use NearMode as Default Mode ...moves the interaction area closer to the sensor, to a range of approximately 0.5 to 3 meters. However, the values are usually less accurate. When this option is not enabled, the default range of Kinect is approximately 0.8 to 4 meters.

Filter Invalid Interaction Data ...defines whether the position values under “Interaction” may also lie outside the computer’s screen resolution.

Filter Inferred Joints ...defines whether joint information is always supplied (option inactive) or whether values that were only estimated by the software are withheld from Avio (option active).
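
The effect of Filter Inferred Joints can be sketched as a simple filter over the joint list. This assumes joints carry the three tracking states reported by the Kinect for Windows SDK v1.x (not tracked, inferred, tracked); the class and function names are hypothetical and do not reflect the Avio plugin's internals.

```python
from dataclasses import dataclass
from enum import Enum


class TrackingState(Enum):
    # Mirrors the three joint states of the Kinect for Windows SDK v1.x.
    NOT_TRACKED = 0
    INFERRED = 1   # position estimated by the software
    TRACKED = 2    # position measured directly


@dataclass
class Joint:
    name: str
    x: float
    y: float
    z: float
    state: TrackingState


def joints_to_send(joints, filter_inferred: bool):
    """Return the joints that would be forwarded to Avio.

    filter_inferred=False: all joint information is supplied (option inactive).
    filter_inferred=True: estimated (inferred) joints are withheld (option active).
    """
    if not filter_inferred:
        return list(joints)
    return [j for j in joints if j.state is not TrackingState.INFERRED]
```

Enabling the option trades completeness for reliability: channels of inferred joints simply stop updating instead of receiving estimated values.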

The settings under Dynamic Settings cannot be configured via Avio Manager and become effective immediately after saving the changes:

Tracked Persons ...defines the number of persons whose data is sent. The slider setting defines the number of persons for whom information is supplied to Avio.

Displayed Joints ...defines the joints for which information (x-, y- and z-values) is sent to the Avio System, and therefore the number of Avio channels. The items All and None help when selecting the joints.

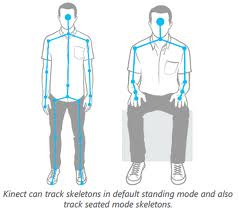

Depending on the joints selected, Kinect uses either Seated Mode or Default Mode. In Seated Mode a person is tracked only on the basis of upper-body information; this mode usually requires a little movement. In Default Mode the entire body must be visible. Default Mode is used automatically whenever a joint is selected that is not part of Seated Mode, e.g. KneeRight.
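
The automatic choice between the two modes amounts to a subset test. This sketch assumes that Seated Mode covers the ten upper-body joints tracked in seated mode by the Kinect for Windows SDK v1.x, with joint names following the SDK's JointType names (such as KneeRight above); the function name is hypothetical.

```python
# Upper-body joints tracked in seated mode by the Kinect for Windows SDK v1.x
# (assumption: the Avio plugin uses the same set).
SEATED_JOINTS = frozenset({
    "Head", "ShoulderCenter", "ShoulderLeft", "ShoulderRight",
    "ElbowLeft", "ElbowRight", "WristLeft", "WristRight",
    "HandLeft", "HandRight",
})


def tracking_mode(selected_joints) -> str:
    """Seated Mode unless at least one selected joint lies outside the seated set."""
    return "Seated" if set(selected_joints) <= SEATED_JOINTS else "Default"
```

Selecting only head, arm and hand joints keeps Seated Mode; adding a single lower-body joint switches to Default Mode.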

Smoothing ...defines an optional correction of the joint data. The options Fast, Medium and Slow are available; changing the selection also changes the slider values (Smoothing, Prediction, MaxDeviation, Correction, Jitter Radius). Selecting Custom allows customized correction values to be entered.

Smoothing ...defines the softness of the movement. The lower the value, the less smoothing is applied; the higher the value, the slower and smoother the movement. A value of zero supplies the raw data.

Correction ...defines how quickly the filtered values are corrected towards the raw data. The higher the value, the faster the movement (i.e. the closer to the raw data); the lower the value, the slower and calmer the movement.

Prediction ...defines the number of frames the algorithm predicts ahead. The higher the value, the more likely the value is to overshoot during fast movements.

Max Deviation ...defines the radius (in meters) by which the filtered position may deviate from the raw data.
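
How the five sliders interact can be illustrated with a one-axis smoothing filter. The Kinect for Windows SDK's skeleton smoothing is based on Holt double exponential smoothing with exactly these parameters; the sketch below follows that published approach in simplified form (one coordinate, no special handling of the second frame). It is an assumption-based illustration, not the Avio plugin's code, and all names and default values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SmoothingParams:
    smoothing: float = 0.5       # 0 = raw data, higher = smoother
    correction: float = 0.5      # higher = follows the raw data more quickly
    prediction: float = 0.5      # frames to predict ahead
    jitter_radius: float = 0.05  # meters; smaller changes are damped
    max_deviation: float = 0.04  # meters; limit for deviation from raw data


class JointFilter1D:
    """Simplified Holt double exponential smoothing for one joint coordinate."""

    def __init__(self, params: SmoothingParams):
        self.p = params
        self.filtered = None  # last filtered position
        self.trend = 0.0      # last estimated change per frame

    def update(self, raw: float) -> float:
        p = self.p
        if self.filtered is None:
            # First frame: nothing to smooth against yet.
            self.filtered = raw
            self.trend = 0.0
        else:
            # Jitter reduction: small changes are pulled towards the last value.
            diff = abs(raw - self.filtered)
            if p.jitter_radius > 0 and diff <= p.jitter_radius:
                w = diff / p.jitter_radius
                raw_j = raw * w + self.filtered * (1.0 - w)
            else:
                raw_j = raw
            prev = self.filtered
            # Level: blend the new value with the previous prediction.
            self.filtered = raw_j * (1.0 - p.smoothing) + (prev + self.trend) * p.smoothing
            # Trend: blend the latest change with the previous trend.
            self.trend = (self.filtered - prev) * p.correction + self.trend * (1.0 - p.correction)
        # Predict ahead by the configured number of frames.
        predicted = self.filtered + self.trend * p.prediction
        # Keep the output within max_deviation of the raw value.
        dev = abs(predicted - raw)
        if p.max_deviation > 0 and dev > p.max_deviation:
            predicted = raw + (predicted - raw) * (p.max_deviation / dev)
        return predicted
```

With all sliders at zero the filter passes the raw data through unchanged, matching the description of Smoothing above; one filter instance would be kept per joint and axis.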

Person Selection ...defines the order in which persons are ranked and selected. KinectDefault attempts to select the most active person. Option FirstComeFirstServe assigns position 1 to the first person recognized; this position is kept until the person leaves the field of view. Option SmallestZ ranks persons by their distance from Kinect: the closer a person is to Kinect, the higher he or she is ranked. XCenter works similarly to SmallestZ but uses the most central person as the main person: the further a person is from the center, the lower he or she is ranked.

Option HotSpot allows a separate point (x-, y- and z-coordinate) to be defined. The person closest to this point is regarded as the main person.

Please bear in mind that complete joint data is supplied only for the first two persons. For any other persons only an approximate position is available.
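
The ranking options can be sketched as sort keys. This is a minimal illustration under stated assumptions: persons carry a first-seen time and x-, y-, z-positions in meters, with x measured from the sensor's center line; KinectDefault is omitted because its activity measure is not described here. The Person class, rank_persons and the default hotspot value are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Person:
    tracking_id: int
    first_seen: float  # time the person was first recognized, in seconds
    x: float           # lateral offset from the sensor's center line, in meters
    y: float           # height, in meters
    z: float           # distance from the sensor, in meters


def rank_persons(persons, mode, hotspot=(0.0, 0.0, 2.0)):
    """Return persons ordered so that index 0 is the main person.

    hotspot is only used by mode "HotSpot"; its default is a made-up point.
    """
    keys = {
        # earliest-recognized person stays first until he or she leaves
        "FirstComeFirstServe": lambda p: p.first_seen,
        # closest to the sensor first
        "SmallestZ": lambda p: p.z,
        # closest to the center line first
        "XCenter": lambda p: abs(p.x),
        # closest (Euclidean distance) to the user-defined point first
        "HotSpot": lambda p: math.dist((p.x, p.y, p.z), hotspot),
    }
    if mode not in keys:
        raise ValueError(f"mode not covered by this sketch: {mode}")
    return sorted(persons, key=keys[mode])
```

Given the note above, only `rank_persons(...)[:2]` would receive complete joint data; the remaining entries would carry a position only.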

Show Face Data ...defines whether face information is supplied. If this box is not checked, no face gestures can be tracked.

To define the gestures to be tracked, click Configure Gesture Settings. A configuration dialog opens:

Under Gestures select the required gestures.

Under General Gesture Setting, the slider Min. Gesture Distance sets a minimum distance for gestures. This setting is used mainly for swiping gestures, to minimize the triggering of gestures made by mistake. Grip gestures, on the other hand, are triggered once half of the specified distance has been covered.

Min. Gesture Time ...defines the minimum time in milliseconds a swiping gesture must take to be recognized as such.

Max. Jitter Time ...defines how long a hand may be outside the defined minimum distance to the body and still be recognized as performing a swiping gesture.

Min. Movement X and Min. Movement Y ...define the minimum distance in x- and y-direction that must be covered for a movement to be recognized as a swiping gesture.

Show Accumulated Values of Continuous Gestures (MultiGrip, SingleGrip) ...defines whether accumulated values are also to be displayed.

Limit Accumulated Values ...defines whether the accumulated values are limited to a maximum or minimum.

After all settings have been made, close the dialog by clicking Save.
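
How the swipe settings might work together can be sketched as a check over one recorded hand trajectory. This rests on an interpretation that the text above leaves open: Min. Gesture Distance is read as how far the hand must be held in front of the body, and Max. Jitter Time as how long it may dip below that distance. All names, units and default values are hypothetical, not Avio's.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class HandSample:
    t_ms: int     # timestamp in milliseconds
    x: float      # horizontal hand position in meters (right = positive)
    y: float      # vertical hand position in meters (up = positive)
    reach: float  # how far the hand is held in front of the body, in meters


@dataclass
class SwipeSettings:
    # Hypothetical example values, not Avio's defaults.
    min_gesture_distance: float = 0.2
    min_gesture_time_ms: int = 100
    max_jitter_time_ms: int = 50
    min_movement_x: float = 0.3
    min_movement_y: float = 0.3


def detect_swipe(samples: Sequence[HandSample], s: SwipeSettings) -> Optional[str]:
    """Classify one hand trajectory as a swipe direction, or return None."""
    if len(samples) < 2:
        return None
    # The hand must stay extended; dips below the minimum distance are
    # tolerated for at most max_jitter_time_ms.
    dip_start = None
    for sample in samples:
        if sample.reach < s.min_gesture_distance:
            if dip_start is None:
                dip_start = sample.t_ms
            if sample.t_ms - dip_start > s.max_jitter_time_ms:
                return None
        else:
            dip_start = None
    # The gesture must last at least the minimum gesture time.
    if samples[-1].t_ms - samples[0].t_ms < s.min_gesture_time_ms:
        return None
    dx = samples[-1].x - samples[0].x
    dy = samples[-1].y - samples[0].y
    # Use the dominant direction; each axis has its own minimum movement.
    if abs(dx) >= abs(dy) and abs(dx) >= s.min_movement_x:
        return "SwipeRight" if dx > 0 else "SwipeLeft"
    if abs(dy) > abs(dx) and abs(dy) >= s.min_movement_y:
        return "SwipeUp" if dy > 0 else "SwipeDown"
    return None
```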

Clicking Show Kinect Window in the Configure Kinect dialog opens a window showing the current camera image. Three display options are available:

Show Color Stream ...shows the color image.

Show Interaction Stream ...shows the approximate area of the currently tracked person.

Show Skeleton Stream ...additionally displays the Kinect joint information graphically.

After making all settings, close the Configure Kinect dialog by clicking Save. When you open Avio Manager, you will find port Kinect under Avio Service; it contains the previously selected parameters as channels, which can now be connected to other channels. See also Wings Avio Manager - An Overview and Connecting and editing channels.

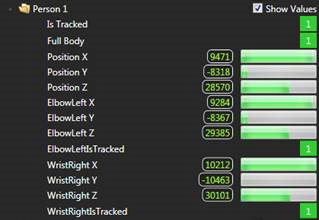

In addition to the color image, the Kinect sensor captures depth information, which allows joint information to be derived from the captured image. The Kinect plugin in Avio supplies exactly this information, including x-, y- and z-coordinates. This joint information is displayed in Avio Manager at port Person.

In addition to the x-, y- and z-coordinates of the joints, port Person also contains IsTracked information, which indicates whether the joint is currently being tracked. The approximate position of the tracked person is also given. Depending on the joints selected in the menu, Default or Seated mode is selected automatically.

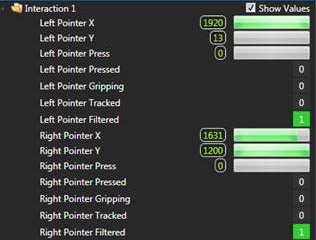

The joint information is also displayed at port Interaction, in a slightly modified form. This information is scaled for use on a screen and is particularly useful for controlling a mouse pointer: the position of the hand is converted into x- and y-coordinates on the screen. Grip and Press information is supplied at the same time. Grip indicates whether a hand is open or closed; Press indicates whether a pushing movement towards the Kinect was made.

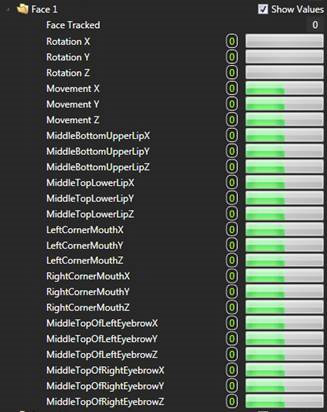
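
The conversion of a hand position into screen coordinates can be sketched as a linear mapping. This assumes the hand position arrives normalized so that 0.0 to 1.0 spans the interaction area (values outside that range are possible), and that Filter Invalid Interaction Data keeps results within the screen by clamping; the plugin might instead discard such values. The function name is hypothetical.

```python
def to_screen(nx: float, ny: float, width: int, height: int,
              filter_invalid: bool = True) -> tuple[int, int]:
    """Map a normalized hand position (0.0 to 1.0) to pixel coordinates.

    With filter_invalid=True the result is clamped to the screen; otherwise
    positions outside the screen resolution are passed through.
    """
    x = round(nx * (width - 1))
    y = round(ny * (height - 1))
    if filter_invalid:
        x = min(max(x, 0), width - 1)
        y = min(max(y, 0), height - 1)
    return x, y
```

A mouse-pointer controller would call this for every new Interaction value and could use the Grip state as the button state.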

Facial information can also be recognized to a limited extent. Provided Show Face Data was selected in the menu, port Face shows the rotation of the face around the x-, y- and z-axis, smaller movements and the approximate position of the eyebrows and mouth.

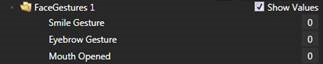

This information in turn allows further data to be extracted. Ports HandGestures and FaceGestures detect and display movement and position patterns. FaceGestures include smiling, raising an eyebrow and opening the mouth.

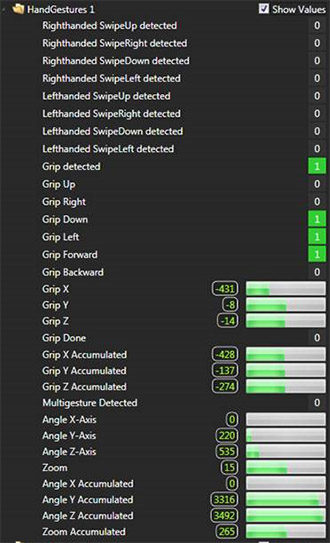

Port HandGestures displays gestures recognized from the hand data, such as swiping to the left, right, up and down, e.g. for navigating between pages. It is also possible to make a grip movement, i.e. closing a hand and then moving it in one direction; this can be used for moving objects. However, each new grip starts again from the beginning. Accumulated values can therefore also be displayed, so that several grip gestures in succession can move an object across the screen. In addition to the Grip gesture there is a MultiGrip gesture, which is triggered by closing both hands. From the movement of both hands, rotations around the x-, y- and z-axis as well as changes in distance (zoom) are tracked. Here, too, accumulated values are supplied that combine several rotational movements into one.
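
The rotation and zoom of a MultiGrip gesture can be derived from the vector between the two hands. This minimal sketch covers only rotation around the z-axis and zoom, using x- and y-positions; rotations around x and y are omitted. It is an illustration of one plausible calculation, not the plugin's documented method, and the function name is hypothetical.

```python
import math


def multigrip_delta(left_start, right_start, left_now, right_now):
    """Return (rotation_z_degrees, zoom_factor) between two two-hand poses.

    Each argument is an (x, y) hand position; rotation is in [-180, 180).
    """
    v0 = (right_start[0] - left_start[0], right_start[1] - left_start[1])
    v1 = (right_now[0] - left_now[0], right_now[1] - left_now[1])
    d0 = math.hypot(*v0)
    if d0 == 0:
        raise ValueError("hands must not coincide at the start of the grip")
    # Rotation around z: change in the angle of the left-to-right hand vector.
    rotation = math.degrees(math.atan2(v1[1], v1[0]) - math.atan2(v0[1], v0[0]))
    rotation = (rotation + 180.0) % 360.0 - 180.0
    # Zoom: ratio of the current to the initial distance between the hands.
    zoom = math.hypot(*v1) / d0
    return rotation, zoom
```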

In addition, accumulated values can be reset to 0, Kinect can be switched on and off, and some settings can be changed.
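
The accumulated values described above, together with Limit Accumulated Values and the reset to 0, can be sketched as a small accumulator. The class name and the way limits are passed are hypothetical; this only illustrates the behavior described in this topic.

```python
from typing import Optional


class Accumulator:
    """Sums per-gesture deltas across repeated grips (hypothetical helper)."""

    def __init__(self, minimum: Optional[float] = None, maximum: Optional[float] = None):
        self.minimum = minimum  # None = no lower limit
        self.maximum = maximum  # None = no upper limit
        self.value = 0.0

    def add(self, delta: float) -> float:
        """Add the movement of one completed grip and apply the limits, if any."""
        self.value += delta
        if self.minimum is not None:
            self.value = max(self.value, self.minimum)
        if self.maximum is not None:
            self.value = min(self.value, self.maximum)
        return self.value

    def reset(self) -> None:
        """Reset the accumulated value to 0."""
        self.value = 0.0
```

Without limits, two grips of 0.25 and 0.5 accumulate to 0.75; with limits of -1 and 1, further grips in the same direction stop at 1.0.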